Meta knows a lot about you. OpenAI will know more. If it doesn't already...

"A journal that can speak with you, that can help you reflect, is a fantastic tool. But it’s also a privacy nightmare."

A few years ago, I made the drastic decision to abandon all Meta products. I quit because I was uncomfortable with such a large degree of my social life running through the company. Here in Europe, WhatsApp is the default messaging app, and back then, very few people used other apps. Even between iPhone users, it was much more common to use WhatsApp than iMessage. WhatsApp’s biggest competitor was probably Facebook Messenger. Despite Meta’s dominance, I made the jump, and I disappeared from group chats and my local music network.

These days, I’m back on Instagram and WhatsApp — partly for mental health reasons. I know that sounds ironic, given that many people ditch Instagram for their wellbeing, but it allowed me to feel less isolated at the tail-end of the pandemic. I could jump back into group chats of friends making plans, and through Instagram Stories I could learn what events were happening in my local scene or the communities that introvert-me likes to dip in and out of.

I am still so irked by the privacy implications of running so much of my social life through one company. Imagine if Meta were a post office, and every post or DM was a letter. That’s not just what you send, but also everything that’s sent to you, by scrolling through reels, stories, and posts. Now imagine that post office keeps a copy of ALL OF THAT and opens all your envelopes. That is simultaneously Meta and a description of the East German government’s notorious Stasi security apparatus’s wet dream.

But the Stasi’s wet dream is nothing compared to the oceanic proportions of what OpenAI’s ChatGPT is enabling.

Journals 2.0

I recently came across a Reddit thread in which a person lauded ChatGPT’s therapeutic abilities. With ChatGPT, OpenAI has created a tool that can be a cross between a journal and a therapist. I hear about this use case so often that I imagine there are hundreds of thousands of people, if not millions, using ChatGPT in this way.

In fact, ChatGPT has recently been in the news after it released a particularly sycophantic update, which led users to accuse the app of being dangerously encouraging.

I don’t fault anyone for using technologies like AI in ways that support them. A journal that can speak with you, that can help you reflect, is a fantastic tool. But it’s also a privacy nightmare.

Privacy concerns

Therapists use secure platforms to talk to their clients. That’s tools like TherapyNotes, doxy.me, VSee, and tech giants like Zoom, Google and Microsoft also all have communication tools that comply with healthcare-related privacy laws. OpenAI doesn’t. Its privacy practices are… questionable. And shrouded in mystery.

Last December, Italy fined OpenAI 15 million euros and ordered them to run a six-month media campaign “to raise public awareness about how ChatGPT works, particularly as regards to data collection of users and non-users to train algorithms.” Italy’s privacy watchdog found that OpenAI processed people’s personal data to:

“train ChatGPT without having an adequate legal basis and violated the principle of transparency and the related information obligations towards users.”

Remember the recent AI action figure frenzy or the great Ghibli AI flood of 2025? People who uploaded photos of themselves not only shared the image to be used as training data, but also included EXIF info, such as the exact place and time where the photo was taken. But you don't even need to upload your photos to end up in ChatGPT. In 2022, one artist found their private medical record photos in a popular AI training set.

Once you disclose information, or if someone collects information about you, it is no longer in your control. The chance of that information spreading further increases based on the number of copies available and the number of people who have access to those copies.

Facebook found out about this the hard way through, among other things, the Cambridge Analytica scandal, which saw 87 million people’s data harvested through a third-party app. The data was detailed enough to construct psychographic profiles that could be tied to each person’s name and location.

Still, the data Zuckerberg has been collecting pales in comparison with what people entrust ChatGPT with. That ranges from work-related data, all kinds of stuff people would normally Google, the expression of people’s most outlandish fantasies through image creation, help with personal emails and messages, and secrets from the depths of their psyche that they may not even share with their closest friends. All in one place.

Why privacy is a human right

Companies like Meta, Google, and now OpenAI know a lot about you. With the amount of data they have about you, they likely know more than any individual friend or partner. They know everything you watch, what photo made you stop scrolling and for how long, what you type (including that which you don’t post), what you search, delete, and they know this about all of your friends too. They know what devices you use, where you use them, in what WiFi networks, and who else has connected to those networks — even if you don’t know those people.

This is a lot for one entity to know about you. Imagine a single person, a stranger, knowing all of this about you, but you know next to nothing about them. This gives them a certain power over you, which can be used against you. Blackmail is a common form of cybercrime, but we also have to consider a global shift towards more authoritarian governments.

There is a reason why the right to privacy is mentioned in 186 constitutions worldwide. In this case, it’s more complicated: these are organisations with many employees, and even if all of them are trustworthy, they’re all potential points of failure for actors with bad intentions. And of course, companies with good stewardship can be bought by people whom you may not trust with your info. *cough* Twitter *cough*

What you can do

My primary goal of this piece is to illustrate OpenAI’s trajectory, but you may now be wondering how to better manage your data. I recommend checking out the Electronic Frontier Foundation’s Surveillance Self-Defense guide, but let me also use a few pointers specific to ChatGPT:

Be aware of what you share. Assume that what you disclose may leak. That can be tomorrow, or that can be in 20 years. Keep in mind that even things you type, but don’t end up sharing, may be logged.

Be aware of what you click. Everything you do in the app is tracked, including which links you choose to open, e.g. if you’re doing research.

Avoid sharing information that could be used against you or others, should it become public.

Respect your friends’ privacy. Don’t put their addresses, full names, or other personal info into ChatGPT. Use nicknames to create more ambiguity.

Regularly clean ChatGPT’s memory feature. Does it really need to remember that you went on a holiday to Italy in 2023 with your ex-partner?

Use the temporary chats feature. OpenAI claims these chats won’t be used to train their models, are deleted from OpenAI’s servers after 30 days, and don't show up in your history or memory (source).

Stop OpenAI from using your data for training. You can opt out by using ChatGPT’s data controls. Imagine your private challenges from your Italian holiday somehow making their way into someone’s replies years down the line?

If using third-party apps with ChatGPT, be cautious. This is a Cambridge Analytica scandal waiting to happen all over again.

Keep yourself informed about potential risks. I highly recommend listening to the Darknet Diaries podcast to better understand why your data is valuable to actors with bad intentions, even if you think you have nothing to hide.

Another thing to consider is to completely delete your account every now and then. In many jurisdictions, companies are required to remove all of a user’s data in such situations. You can just create a new account and start fresh.

Did I miss anything? Let other readers know in the comments.

(- ‿- ) For your ears

I’ve recently found myself going back to an old ambient favourite by H.U.V.A. Network (Solar Fields & AES Dana): the 2009 album Ephemeris. At the time, I was really into ‘psybeint’ and ‘psychill’, which are downtempo genres with a psychedelic twist to them. This album is a great example of that. It has a nice build-up and eventually the journey leads into progressive trance, before slowing back down.

(◕‿◕✿) For your eyes

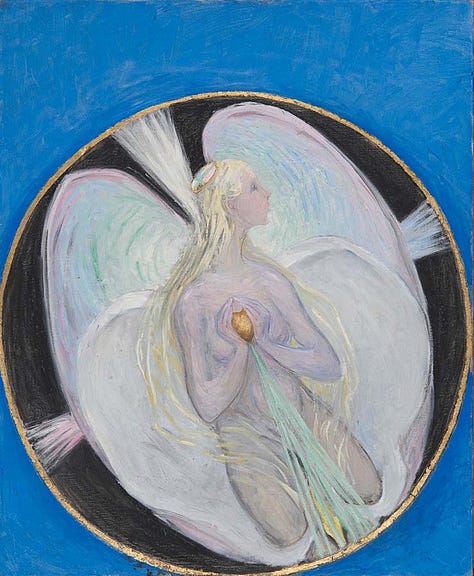

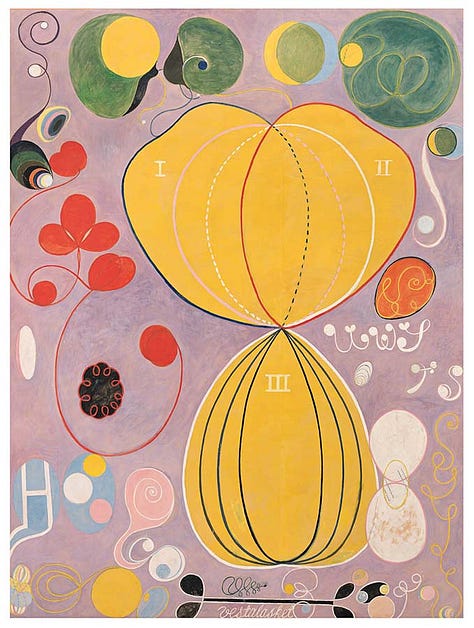

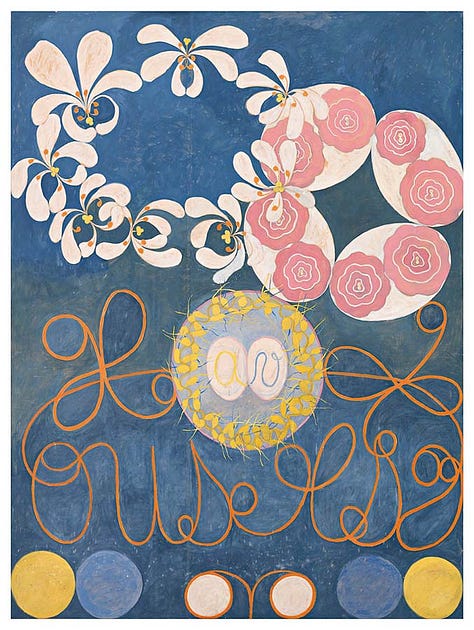

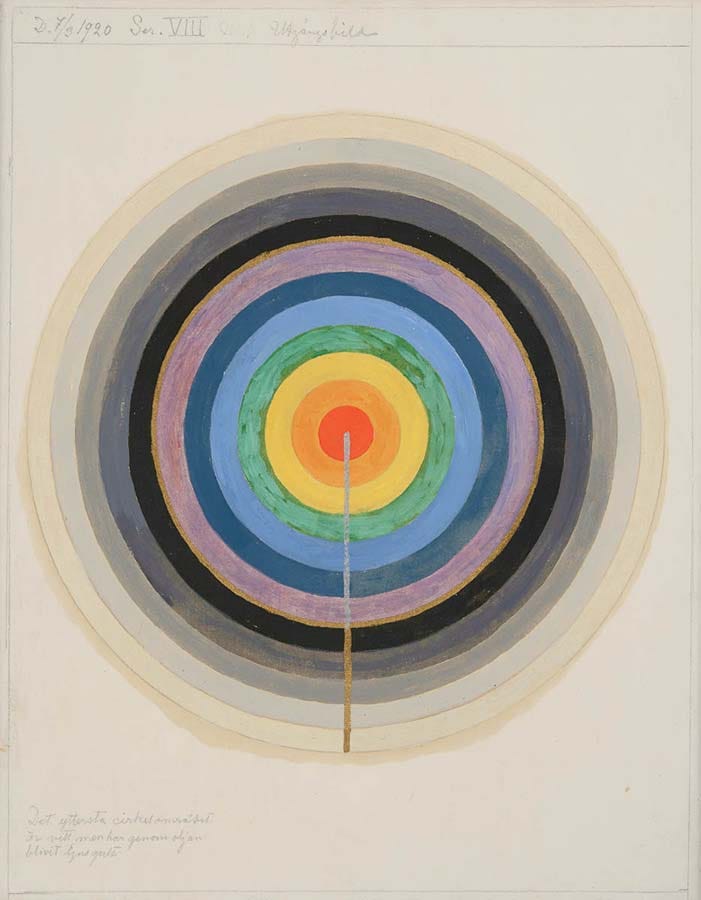

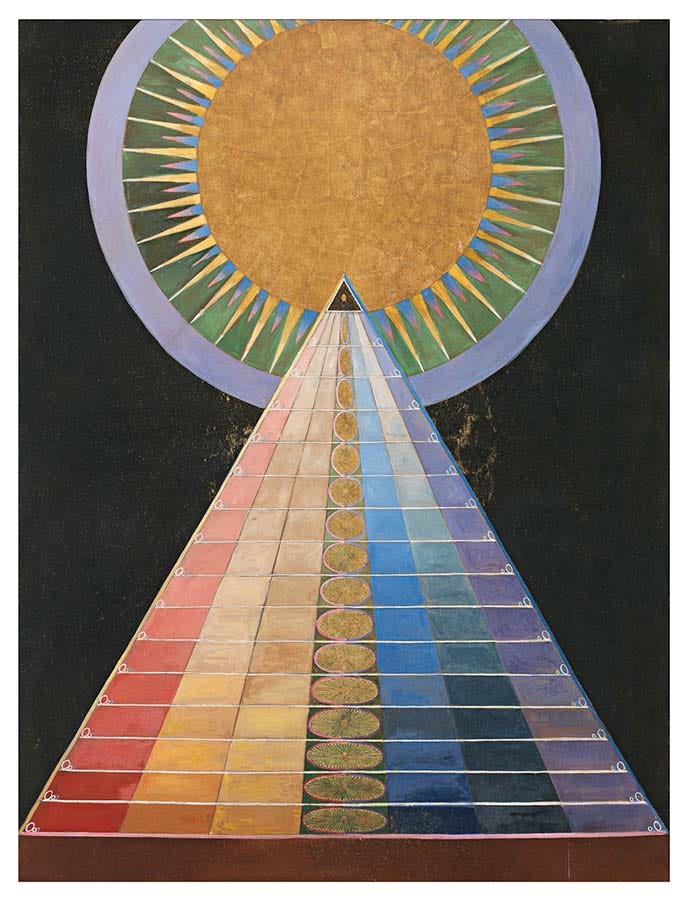

Kandinsky, Mondriaan, and Malevich weren’t the first to paint purely abstract compositions. The work of Swedish artist and mystic Hilda af Klint predates theirs, though it took decades after her death for it to gain serious attention.